Balanced Value Impact Model at #LibPMC


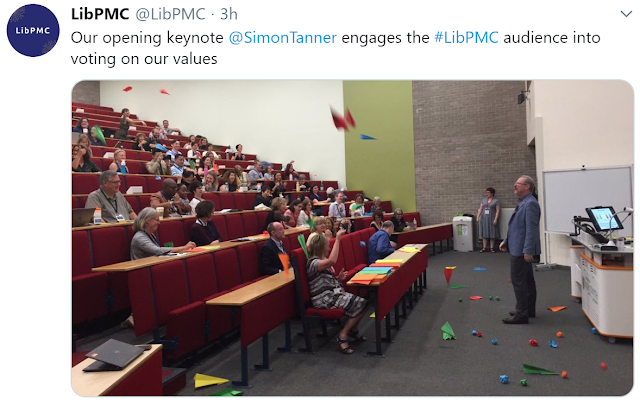

Image courtesy of @LIbPMC conference

See the conference hashtag #LibPMC and website for more information.

The slides for this talk are available on Slideshare.

During the keynote I held a voting session on the Value Lenses in the BVI Model. The question asked was:

Which Value most represents your personal sense of the highest priority for digital collections?

The audience voted using a colour-coded voting system of paper planes or scrunched up paper and threw their votes to the front of the room. I have gathered the votes and the results are:

- Education = 49 votes

- Utility = 23 votes

- Community = 19 votes

- Inheritance / Legacy = 16 votes

- Existence / Prestige = 5 votes

We had some joint votes for Utility/Community, Utility/Education and Education/Community. This reflects the slightly artificial choices the voting was forcing participants into.
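To put the tallies in proportion, here is a minimal sketch that converts the counts listed above into shares of the total. It assumes the five figures are the complete count and does not treat the joint votes separately, since the post does not say how those were tallied.

```python
# Vote tallies as reported in the results list above.
votes = {
    "Education": 49,
    "Utility": 23,
    "Community": 19,
    "Inheritance / Legacy": 16,
    "Existence / Prestige": 5,
}

# Assumption: these five counts make up the whole vote.
total = sum(votes.values())

# Share of the counted votes for each Value Lens, as a percentage.
shares = {lens: 100 * count / total for lens, count in votes.items()}

# Print the lenses from most to least popular.
for lens, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{lens}: {votes[lens]} votes ({share:.1f}%)")
```

On these figures Education takes a little under half of the counted votes, with the other four lenses sharing the rest.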

My fave vote, showing great creativity, was this one:

Here's a clip of the voting, thanks for joining in everyone!


Answering Questions

I also committed to answering the questions posed through the sli.do system that there was not time to engage with during the presentation itself. Here are the questions and my answers (sources cited at end). Many of these answers are based upon my book, due for publication this Autumn.

Delivering Impact with Digital Resources - Planning your strategy in the attention economy

Q: Surely the glass (drinking vessel), is very important too?

See here for more information on the thought experiment: Is the value in the wine, the glass or the drinking?

Q: What is the attention economy?

In an information-rich world, the wealth of information means a dearth of something else - the attention of its recipients to attend to and engage with the information. What we take notice of, and the regarding of something or someone as interesting or important, delineates what we consider worthy of attending to and thus defines our economics of attention.

Herbert A. Simon first articulated the concept of attention economics:

"When we speak of an information-rich world, we may expect, analogically, that the wealth of information means a dearth of something else -- a scarcity of whatever it is that information consumes. What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention, and a need to allocate that attention efficiently among the overabundance of information sources that might consume it."

Simon, H. A. (1971) ‘Designing organisations for an information-rich world’, in Computers, communications, and the public interest, p. 37. Available at: https://digitalcollections.library.cmu.edu/awweb/awarchive?type=file&item=33748.

Q: If you wanted to measure value of a library in a university from different stakeholder perspectives, what methods would you use... interviews, surveys, etc.?

This very much depends upon your Strategy/Value pairings: what you are setting out to measure defines the best way to measure it. It is likely that the means of measurement will not be surprising and will include things like web metrics, surveys, interviews, focus groups etc.

An example to illustrate:

If considering a University with a digital collection relating to a famous historical figure, then the Perspective/Value pairings for Social Impact might require an investigation of:

- Strategic Perspective: Social

- Value Lenses: Education + Prestige + Inheritance/Legacy

So measuring Education may mean looking at awareness and knowledge before and after, which could be actively tested or based on a questionnaire, for instance. Inheritance/Legacy is a qualitative measure of an opinion of intangible value. Therefore some sort of valuation is sought and measures such as case studies or consumer surplus value might be useful.

There is not one way or method which is the heart of the BVI Model. Work through Stage 1, Setting the Context, and this will reveal the things to measure for your specific institution and circumstances. The methods will flow from those things you seek to measure.

Q: How do you measure the societal impact of a laboratory doing basic scientific research?

The obvious answer is to look at the societal benefit of the research outcomes. This will depend very much on the laboratory and the science being done. Health-based measures may use QALYs, for instance. Note that the Wellcome Trust estimates an average of 7 years from research paper to clinical practice and possibly another 7 years to show the beneficial impacts on patient health and wellbeing. So it will take a long time and will not be a quick measure.

The US NSF has some useful web pages on the societal impact of science.

See also for the BVI Model this:

Estimating the value and impact of Nectar Virtual Laboratories (Sweeny, Fridman and Rasmussen, 2017)

Q: Is there a collection of case studies available that show how the Balanced Value Impact Model has been put into practice? (To help connect theory to practice)

The Europeana Impact Playbook and the Europeana impact pages are the best places to start.

Other implementations I am aware of:

- Europeana’s Impact Playbook (Verwayen et al., 2017)

- The Wellcome Library digitisation programme (Tanner, 2016c)(Green and Andersen, 2017)

- The People's Collection Wales with the National Museum Wales, the National Library of Wales and the Royal Commission on the Ancient and Historical Monuments of Wales (Vittle, Haswell-Walls and Dixon, 2016)

- Estimating the value and impact of Nectar Virtual Laboratories (Sweeny, Fridman and Rasmussen, 2017)

- A Twitter Case Study for Assessing Digital Sound (Giannetti, 2018)

- Implementing resource discovery techniques at the Museum of Domestic Design & Architecture, Middlesex University (Smith and Panaser, 2015)

- Jisc Training and Guidance: Making your digital collections easier to discover (Colbron et al., 2019)

- Museum Theatre Gallery, Hawke's Bay (Powell, 2014)

- Valuing Our Scans: Understanding the Impacts of Digitized Native American Ethnographic Archives. A research project led by Ricardo L. Punzalan at the College of Information Studies at University of Maryland.

- Developing impact assessment indicators – making a proposal for the UK Web Archive. Julie Fukuyama & Simon Tanner. Slides here, paper to follow:

https://www.slideshare.net/KDCS/julie-fukuyama-simon-tanner-developing-impact-assessment-indicators-making-a-proposal-for-the-uk-web-archive

When my book is out there will be an accompanying website with exemplars and templates.

Q: Can the model be applied to other things apart from digital collections?

Yes it can. It was specifically focussed on digital collections but it can be applied (see answer above) in other contexts.

Q: What's the best way to form SMART objectives in the BVIM if you don't already have a good body of data available?

Start slow and be purposeful about your decision making!! Work through Stages 1-2 of the model and then once Objectives are set the indicators will become more clear. Give yourself time to develop these ideas and set a long enough time to measure over to show change.

In order to measure a change in anything, it is necessary to have a starting point, a baseline. Baselines are usually set in time but can also use something like service level provision (e.g. 200,000 Instagram followers with an average engagement rate per post of 3.8%). A baseline date is whatever the activity owner wishes it to be as a point of reference for change measurement to be set. Using the outcomes of the BVI Model to set new benchmarks for service provision that are also baselines for future measurement will help to set realistic, focused performance targets.
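As a rough illustration of measuring change against a baseline, the sketch below uses the Instagram figures from the example above as the baseline. The follow-up figures, the `Measurement` class and the `change_from_baseline` function are all hypothetical, invented only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    followers: int
    engagement_rate: float  # average engagement rate per post, in percent


def change_from_baseline(baseline: Measurement, current: Measurement) -> dict:
    """Return the change in each measure relative to the baseline."""
    follower_change = current.followers - baseline.followers
    return {
        "followers_change": follower_change,
        "followers_change_pct": 100 * follower_change / baseline.followers,
        # Difference between two percentages, expressed in percentage points.
        "engagement_change_points": current.engagement_rate - baseline.engagement_rate,
    }


# Baseline figures taken from the example in the text.
baseline = Measurement(followers=200_000, engagement_rate=3.8)

# Hypothetical follow-up measurement, invented purely for illustration.
follow_up = Measurement(followers=230_000, engagement_rate=4.1)

result = change_from_baseline(baseline, follow_up)
print(result)
```

Once a measurement period ends, the follow-up figures can become the baseline for the next one, in the way the paragraph above describes for benchmarks.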

In Stage 2 the key components are:

- Objectives

- Assumptions

- Indicators

- Stakeholders

- Data Collection

An Objective is an impact outcome that can be reasonably achieved within the desired timeframe and with the available resources. Objectives come from combining the factors explored in Stage 1. Objectives should be expressible in a short, pithy statement that expresses what is to be measured and what the outcome would encompass. The statements are specific to the Value/Perspective pairing and measurable through the Indicators and Data Collection methods selected.

Assumptions: No one can measure everything, for all time, in every way possible. As such there are areas that are deliberately not investigated, or there are assumptions made that certain conditions, skills or resources are already in place. For instance, in the examples of Objectives given above, the assumptions might include access to an internet-enabled computer or participation in community activities. The listing of assumptions is helpful to ensure the assessment process is not corrupted from the beginning by an unseen bias or assumption (‘everyone has a mobile phone these days’) which might either exclude stakeholders or skew the measurement.

Indicators and data collection: Indicators should be specific to the Objectives. There should be as few Indicators as practicable to demonstrate the change investigated in the Objective. Indicators must be SMART (Specific, Measurable, Achievable, Realistic and Timely) and thus the Data Collection methods to support them become more straightforward to define and design.

Despite the many methods available, most Data Collection methods fall into a few basic categories: surveys, questionnaires, observation, focus groups, feedback, and participatory investigations. The focus of the BVI Model upon digital resources also provides some advantages not necessarily available to other impact assessments, most notably the opportunity to reach out digitally to the user base through the digital resource itself or to use Web analytics or social media measures to assess change in usage patterns in response to interventions.
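The Stage 2 components described above can be pictured as one record per Perspective/Value pairing. The following is a minimal sketch of such a record, not the official Framework template: the class name, field names and the example entries are assumptions made for illustration, loosely based on the university example given earlier.

```python
from dataclasses import dataclass, field

PERSPECTIVES = ("Social", "Economic", "Innovation", "Operational")
VALUE_LENSES = (
    "Utility",
    "Education",
    "Community",
    "Inheritance / Legacy",
    "Existence / Prestige",
)


@dataclass
class FrameworkRow:
    """One hypothetical Framework entry for a Perspective/Value pairing."""

    perspective: str
    value_lens: str
    objective: str
    assumptions: list[str] = field(default_factory=list)
    indicators: list[str] = field(default_factory=list)
    stakeholders: list[str] = field(default_factory=list)
    data_collection: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject pairings that are not part of the model.
        if self.perspective not in PERSPECTIVES:
            raise ValueError(f"Unknown perspective: {self.perspective}")
        if self.value_lens not in VALUE_LENSES:
            raise ValueError(f"Unknown value lens: {self.value_lens}")

    def missing_components(self) -> list[str]:
        """List the Stage 2 components that have not been filled in yet."""
        components = {
            "assumptions": self.assumptions,
            "indicators": self.indicators,
            "stakeholders": self.stakeholders,
            "data_collection": self.data_collection,
        }
        return [name for name, entries in components.items() if not entries]


# Hypothetical example entries, for illustration only.
row = FrameworkRow(
    perspective="Social",
    value_lens="Education",
    objective="Increase awareness and knowledge of the historical figure",
    assumptions=["Participants have access to an internet-enabled computer"],
    indicators=["Change in questionnaire scores before and after use"],
    stakeholders=["Students"],
)

print(row.missing_components())
```

A check like `missing_components` is one simple way to spot rows where an Objective has been written down but the means of collecting data for it has not yet been decided.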

There is a tendency to measure what is easy to measure or to automate. Stage 1-2 of the BVI Model would be useful as an audit process for the current status quo and to lead the discussion of the needs of the customer. It requires a commitment to work over longer timescales than initial sales and support and to go beyond mere measures of user satisfaction.

Just avoid delivering products with dashboards that show lots of numbers that are in fact fairly meaningless and all will be fine!

Q: Is the order in the BVI model with 'operational' and 'innovation' on top indicative that these are emphasized more compared to 'economic' and 'social'?

No, that is just how the artist I work with drew them. They are grouped together, with Operational and Innovation being more inward looking and Economic and Social being more outward looking.

Q: How do you get an institution to be ready for evidence based research?

I wish I knew! I can only describe what it looks like and some ways to influence upwards.

Using evidence and information as a way to gain a change in management or executive decision-making is a matter of understanding how to deploy them. Most effective methods will focus upon evidence of a benefit to promise a rewarding outcome or will use evidence upon an issue that acts as a lever to force a change in attitude or behaviour.

Relationships drive influence; evidence and pivotal stakeholder groups drive policy. Align these factors to become an effective advocate for the desired change.

The heart of evidence-based approaches for improved organisational performance is the commitment of leaders to apply the best evidence to support decision-making. Leaders set aside conventional wisdom in favour of a relentless commitment to gather the most useful and actionable facts and data to make more informed decisions. Evidence-based management is a humbling experience for leaders when it positions them to admit what they do not know and to work with their team to find the means of gathering that information. Evidence-based management is naturally a team-oriented and inclusive practice as there is little room for the know-it-all or for an exclusively top-down leadership style. Good managers act on the best information available while questioning what they know.

Q: Are these your cartoons? :-)

See the last slide of my presentation. The drawings are made by the artist Alice Maggs working to my brief of what research ideas I want to express. Her work is fabulous, see her web page here: https://alicemaggs.co.uk/

Q: Our institution is stuck in a bad relationship with KPIs. How do we help them see a better future?

You have my sympathies and I would say this is not particularly unusual.

Using the BVI Model to help your organisation think through what to measure, why that and what you will do with the data would be helpful.

Essential to this is a clear understanding of how to avoid changing the things measured through the way they are measured. It is human nature to treat any form of measurement as a target to reach or a performance metric to exceed. Sometimes known as the Observer Effect or the Hawthorne Effect, this refers to the changes that the act of observation makes to an observed phenomenon. As Goodhart’s Law succinctly states: ‘when a measure becomes a target, it ceases to be a good measure’ and Campbell’s Law speaks to social consequences: ‘the more any quantitative social indicator is used for social decision-making… the more apt it will be to distort and corrupt the social processes it is intended to monitor’. Impact measures should seek to influence the strategic context of the organisation only in response to the results gathered, not in response to the act of measurement itself, otherwise gaming the system to achieve desired results becomes a real and negative possibility.

The key to change may be looking at the system or process for how the KPI is set. In short, is this a process problem or a people problem? If you can find that out then you can plan actions to affect either the process or the people driving that process. Good luck!

Q: We are used to digitize our collections for the future. How can we use impact assessments for our future users?

The BVI Model is best suited for memory institutions and presumes that the assessment is mostly (but not exclusively) measuring change within the ecosystem of a digital resource.

A user’s journey through the BVI Model Framework might look like this:

a. In thinking about the memory institution’s strategic direction, they map their existing documents/policies/vision-statements to the 4 Strategic Perspectives.

b. They can then use these to focus upon their context, investigating the digital ecosystem, the stakeholders and to analyse the situation of the organisation further.

c. These provide a strategic context that allows for decision-making as to what is to be measured and why that measurement is needed.

d. The practitioner can then use the Value Lens to focus attention on those aspects most productive for measurement in that context.

Figure 2.5 illustrates the measurement goals focused via the Strategic Perspectives and Value Lenses.

e. Then the Framework (often a spreadsheet) can be completed for each Value Lens/Perspective pairing.

f. This output is built into an Action Plan for implementation at Stage 3 where the integration of what needs to be measured is related to the practicalities of time, money and other resources.

g. Once all this planning is achieved, then the implementation is phased in according to the Framework and data is gathered alongside any activity measured. The timeframe might be a few months for one measure and more extended periods for others – the Framework helps to keep it all in sync and not lose track of the varied activities.

h. Once a critical mass of data is gathered such that results can be inferred, then the process can start to move into the analysis, narrated via the four Strategic Perspectives: Social, Economic, Innovation and Operational.

i. This narrative acts as a prompt to decision-makers and stakeholders to guide future direction and to justify current situations.

j. The stage of revise, reflect and respond assumes that there is embedded continuous learning to allow for decisions made in every stage to be informed by the results and to be modified in response to change, to feedback and to success criteria.

The BVI Model does not mix well with the Theory of Change models as these attempt to induce a specific desired impact in a very directive fashion. Also, community action models of social impact require the starting focal point to be the community itself and not the organisations that serve them. They should, by their nature, be driven purely by the concerns of that community which may quite possibly exclude the library or museum from a central role in their considerations.

Application of the BVI Model is driven primarily by the needs of the memory institution that is responsible for the digital resource. The stakeholders are a crucial part of the context and drivers for why the impact assessment happens, but essentially the BVI Model is intended as an organisation-led tool. The BVI Model is thus not a community action model in its conception.

Q: From a research intensive university I am interested in your thoughts on where research fits across the values that you presented to us, thx

I would suggest that the UN Sustainable Development Goals would be a better global mapping of values to the research agenda than the reduced list within the BVI Model. All the Value Lenses in the BVI Model map to the UN SDGs but for a research-intensive university, there may be further areas to explore (health, public policy, etc.). We have used this at my home institution, King's College London, as a way to guide some of our thinking about research impact institutionally.

See here for instance:

https://wonkhe.com/blogs/whats-wrong-with-public-engagement/

https://www.timeshighereducation.com/opinion/measuring-social-impact-allows-universities-be-held-accountable

Thank you all for having me at your conference LibPMC! Please comment below or ask more questions in comments and I will continue to engage on this topic.

Resources cited

Colbron, K., Chowcat, I., Kay, D. and Stephens, O. (2019) Making your digital collections easier to discover, Jisc Guide. Available at: https://www.jisc.ac.uk/full-guide/making-your-digital-collections-easier-to-discover.

Giannetti, F. (2018) ‘A twitter case study for assessing digital sound’, Journal of the Association for Information Science and Technology, 00(00). doi: 10.1002/asi.23990.

Green, A. and Andersen, A. (2017) ‘Museums and the Web Finding value beyond the dashboard’, Museums and the Web 2016, pp. 1–8. Available at: http://mw2016.museumsandtheweb.com/paper/finding-value-beyond-the-dashboard/.

Powell, S. (2014) Embracing Digital Culture for Collections, Museums Aotearoa. Available at: https://blog.museumsaotearoa.org.nz/2014/08/11/embracing-digital-culture-for-collections-by-sarah-powell/.

Smith, S. and Panaser, S. (2015) Implementing resource discovery techniques at the Museum of Domestic Design & Architecture, Middlesex University - Social Media and the Balanced Value Impact Model, Jisc ‘Spotlight on the Digital’ project. Available at: http://eprints.mdx.ac.uk/18555/1/case_study_resource_discovery_moda.pdf.

Sweeny, K., Fridman, M. and Rasmussen, B. (2017) Estimating the value and impact of Nectar Virtual Laboratories. Available at: https://nectar.org.au/wp-content/uploads/2016/06/Estimating-the-value-and-impact-of-Nectar-Virtual-Laboratories-2017.pdf.

Tanner, S. (2016c) ‘Using Impact as a Strategic Tool for Developing the Digital Library via the Balanced Value Impact Model’, Library Leadership & Management, 30(4).

Vittle, K., Haswell-Walls, F. and Dixon, T. (2016) Evaluation of the Casgliad y Werin Cymru / People’s Collection Wales Digital Heritage Programme. Impact Report.

The BVI model is a significant advance on previous attempts to capture impact. I would like to see institutions encouraged to look at longer term impacts through follow ups to find out whether the users' perception of the resource had changed over time, and whether they had altered their behaviour/opinions etc. as a result. I would also encourage the use of qualitative research methods to flesh out statistical evidence with rich narratives about the users and their interaction.
