Text Capture and Optical Character Recognition 101

What is text capture

Text capture is a process rather than a single technology. It is the means by which textual content that resides within physical artefacts (such as in books, manuscripts, journals, reports, correspondence etc) may be transferred from that medium into a machine readable format. My focus here is on the capture of text from digital images that have been rendered from physical artefacts. Such digital images may be made via scanners or digital cameras and stored as digital page images for later access and use.In some cases digital images of text content are sufficient to satisfy the end users information needs and provide access to the resource in an electronic format that can be shared online. This sort of digital presentation of text resources is very useful for documents where transcribing the content would be difficult, such as for handwritten letters or personal notes, but it can be done for any type of text. The reader must make their own recognition of the text to render it meaningful and may have an easy time doing this or not. However, to enable other computer based ways to use the text content – such as for indexing, searching, data mining, copying and pasting – then the text must be rendered machine readable.

Machine readable text may be gained through the various text capture processes listed below. Rather than presenting the end user with an electronic ‘photocopy’ of the page, the user is presented with a resource that includes machine readable text. This makes additional computing functions possible and the most important of these is the ability to index and search text. Without machine readable text the computer will not find that document on Chaucer or the pages that contain the words “Wife of Bath”. As a digital repository of text becomes bigger then the only efficient way to navigate through it is with search tools supported by good indexing and this is something that machine readable text makes practicable.

Thus text capture is a process that should be designed to add value to the text resource. Inherent within the concept of adding value is an assessment of whether the cost of delivering the benefit was equitable with the value added. Thus the more automated the capture process the easier it seems to justify the cost for the benefit across a large corpus.

The main methods for text capture are (in order popularity of use):

- Optical Character Recognition (OCR) – sometimes also known as Intelligent Character Recognition (ICR)

- Rekeying

- Handwriting Recognition (HR)

- Voice or speech recognition

Optical Character Recognition (OCR)

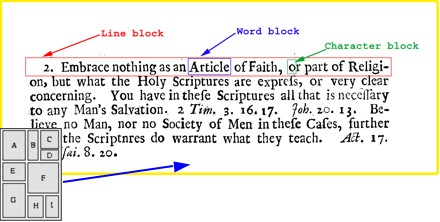

Optical Character Recognition (OCR) is a type of document image analysis where a scanned digital image that contains either machine printed or handwritten script is input into an OCR software engine and translated into a machine readable digital text format (like ASCII text).OCR works by first pre-processing the digital page image into its smallest component parts with layout analysis to find text blocks, sentence/line blocks, word blocks and character blocks. Other features such as lines, graphics, photographs etc are recognised and discarded.

The character blocks are then further broken down into components parts, pattern recognized and compared to the OCR engines large dictionary of characters from various fonts and languages. Once a likely match is made then this is recorded and a set of characters in the word block are recognized until all likely characters have been found for the word block. The word is then compared to the OCR engine’s large dictionary of complete words that exist for that language.

These factors of characters and words recognised are the key to OCR accuracy – by combining them the OCR engine can deliver much higher levels of accuracy. Modern OCR engines extend this accuracy through more sophisticated pre-processing of source digital images and better algorithms for fuzzy matching, sounds-like matching and grammatical measurements to more accurately establish word accuracy.

Gaining character accuracies of greater than 1 in 5000 characters (99.98%) with fully automated OCR is usually only possible with post-1950’s printed text (and not that frequently even then). Gaining accuracies of greater than 95% (5 in 100 characters wrong) is more usual for post-1900 and pre-1950’s text and anything pre-1900 will be fortunate to exceed 85% accuracy (15 in 100 characters wrong). Thus OCR for historical materials is usually hampered by the expensive and time consuming need to manually or semi-automated proofreading and correction of the text to gain as near to 100% as possible.

Optical Character Recognition as a technology is deeply affected by the following factors:

|

| Examples of factors that cause OCR problems |

- Scanning methods possible

- Nature of original paper

- Nature of printing

- Uniformity

- Language

- Text alignment

- Complexity of alignment

- Lines, graphics and pictures

- Handwriting

- Nature of document

- Nature of output requirements

OCR Accuracy Example

If our processes should orientate to the intellectual and user aims desired from that resource, then surely our means of measuring success should also be defined by whether those aims are actually achieved? This means we have to escape from the mantra of character accuracy and explore the potential benefits of measuring success in terms of words – and not just any words but those that have more significance for the user searching the resource. When we look at the number of words that are incorrect, rather than the number of characters, the suppliers' accuracy statistics seem a lot less impressive.For example, given a newspaper page of 1,000 words with 5,000 characters if the OCR engine yields a result of 90% character accuracy, this equals 500 incorrect characters. However, looked at in word terms this might convert to a maximum of 900 correct words (90% word accuracy) or a minimum of 500 correct words (50% word accuracy), assuming for this example an average word length of 5 characters. The reality is somewhere in between and probably more at the higher extent than the lower. The fact is: character accuracy of itself does not tell us word accuracy nor does it tell us the usefulness of the text output. Depending on the number of "significant words" rendered correctly, the search results could still be almost 100% or near zero with 90% character accuracy.

The key consideration for newspaper digitization utilizing OCR is the usefulness of the text output for indexing and retrieval purposes. If it were possible to achieve 90% character accuracy and still get 90% word accuracy, then most search engines utilizing fuzzy logic would get in excess of 98% retrieval rate for straightforward prose text. In these cases the OCR accuracy may be of less interest than the potential retrieval rate for the resource (especially as the user will not usually see the OCRed text to notice it isn't perfect). The potential retrieval rate for a resource depends upon the OCR engine's accuracy with significant words, that is, those content words for which users might be interested in searching, not the very common function words such as "the", "he", "it", etc. In newspapers, significant words including proper names and place names are usually repeated if they are important to the news story. This further improves the chances of retrieval – as there are more opportunities for the OCR engine to correctly capture at least one instance of the repeated word or phrase. This can enable high retrieval rates even for OCR accuracies measuring lower than 90%.

So, when we assess the accuracy of OCR output, it is vital we do not focus purely on a statistical measure but also consider the functionality enabled through the OCR's output such as:

- Search accuracy

- Volume of hits returned

- Ability to structure searches and results

- Accuracy of result ranking

- Amount of correction required to achieve the required performance

Rekeying

Rekeying is a process by which text content in digital images is keyed by a human directly via keyboard. This is differentiated from copy typing by the automation and industrialization of the process. Rekeying tends to be offered by commercial companies in offshore locations with India being the leading outsourcing supplier to the United Kingdom.Rekeying is usually offered in three forms:

- Double rekeying

- Triple rekeying

- OCR with rekeyed correction

Triple rekeying would expect to achieve accuracy levels of 1 in 10,000 characters incorrect (99.99% character accuracy) whilst double rekeying should achieve between 1 in 2500 and 1 in 5000 characters incorrect (99.96 – 99.98% character accuracy).

In the latter option of OCR with rekeyed correction then the text is OCR'd and where the confidence of the engine is lower than a set parameter or if words do not appear in a dictionary then these are inspected by a rekeying operator and corrected manually as required. This normally will deliver in the 99.9% or above accuracy level depending on the nature of the text. For instance, this mode will generally not be as successful for numeric or tabular texts as these are problematic formats for OCR throwing so many potential corrections at the operator that double/triple rekeying would be more efficient.

Two main issues with rekeying

- First, it appears relatively expensive because every character carries a conversion cost and thus the direct costs of capture are very apparent. However, the accuracy reached is very high and generally rekeying is cheaper than OCR when the same accuracy is expected. This is because correcting and proofreading OCR is most often more costly than rekeying with cheap offshore services.

- The second and more problematic issue is the need for an extremely clear specification that accounts for all the variations and inconsistencies in the originals. This is to avoid the rekeyers having to make judgments or interpretations of the text. Tanner’s First Law of Rekeying states “operators should only key what they actually see, not what they think”. This is to avoid assumptions, guesses and to avoid misspelt words in the historic original being ‘corrected’ by the rekeying and thus removing the veracity of the text. As Claude Monet said "to see we must forget the name of the thing we are looking at" and for rekeying this is a challenge that is overcome by a detailed specification that removes from the keying operator the need to understand any context or language but just to key what characters they see. Detailed specifications are hard to write and require a large commitment of time and effort before the project has even got underway.

Handwriting recognition

The specialist conversion of handwriting to machine-readable text is referred to as handwriting recognition (HR). The type of HR used in tablets or your smartphone is comparatively accurate because the computer can monitor the characters whilst they are being formed. The form of HR which converts handwriting from retrospective digital images is much more analogous to OCR in looking for word and character blocks. It is thus less accurate due to the sheer number of handwriting styles and the variation within even one person’s style of writing. Most HR is done on forms (e.g. tax or medical forms) specially designed to control the variation in a person’s handwriting by using boxes for each letter and requiring upper case.I am yet to see a commercial or open source software for automatic transcription of, or the creation of searchable indexes from, handwritten historical documents that is really technically efficient and provides a significant cost efficiency over rekeying. I'm willing to stand corrected so add comments if you know better.

Voice recognition

Voice recognition is a form of transcription where the human operator talks into a microphone connected to their computer and the software translates this into machine readable text. This is relatively inefficient as a human generally talks more slowly than a fast copy typist and there is also a high rate of inaccuracy for any word not normally in the dictionary, such as place names. This may be of use to academics with small amounts of text to transcribe. I merely record the existence of this method for completeness but discard it as generally too inefficient for historical documents.Deciding on the appropriate method for your needs

Deciding upon a suitable text capture method for a project will be defined by available time and resource balanced against project goals. For instance, it is quite acceptable to deliver just an image of a page of text and allow the end user to read it for themselves. This is cheap and relatively easy to deliver, but lacks a lot of functionality that having machine readable text can offer.Some benefits of machine readable text are:

- reuse, editing and reformatting of content;

- full text retrieval provides ease of access;

- indexing of content may be automated;

- metadata extraction and interaction with other systems;

- enabling mark-up into XML or HTML;

- accessibility to text content for visually impaired users; and

- delivering content with a much lower bandwidth requirement

Please note though that this is just one chain, there are a number of possible combinations. The Stop Points also give a broad indication of the level of activity and effort required to achieve them – the further down the tree the more effort (and thus more potentially costly) the Stop Point is likely to be. Projects that have decided upon which Stop Point they are aiming for can then choose the most appropriate text capture method.

- Stop Point One: – represents just delivering the content via digital images. No text capture required.

- Stop Point Two: Indexing – the text is imported to a search engine and used as the basis for full text searching of the information resource. OCR only.

- Stop Point Three: Full text representation – in this option the text is shown to the end user as a representation of the original document. This requires a much higher level of accuracy to be acceptable and thus assumes validation of captured text. OCR or rekeying.

- Stop Point Four: Metadata – all of the above Stop Points would benefit from additional metadata to help describe and manage the resource. Manually added to the above resources with some automatic extraction possible.

- Stop Point Five: Mark-up – content is presented to the end user with layout, structure or metadata added via XML mark-up.

Another feature of the above flow is that included in all stages are the collection assessment, preparation and scanning. It is essential to assess the collection to identify its unique characteristics. These may be physical or content driven characteristics, but these unique features will drive the digitisation mechanism and help define the required provision and access routes to the electronic version.

There are no standard digitisation projects and the defining the nature of the original materials to be digitised is the essential first step of any conversion project. Without the steps then none of the other steps should be considered.

What digital libraries in the world go as far as Stop Point Five? I only know of Wikisource.org.

ReplyDeleteOld Bailey Session Papers would be a good example. Also pretty much any TEI related project you could think of. Some examples of when it might be used below:

Deletehttp://www.oldbaileyonline.org/ admittedly rekeyed & OCR'd content

http://www.finerollshenry3.org.uk/home.html structured data

Simon, we use OCR in BoB (www,bobnational.com) to convert the subtitles, which are broadcast as bitmaps, into text. This machine readable text is time stamped and is searchable as time based metadata.

ReplyDeleteBrilliant example, thank you Tony.

DeleteBig fan also of BUFVC. We experimented with speech to text from video and film and it was sometimes good, sometimes quite poor according to the context of the video (news and sports commentary was good - voxpops on the street, drama etc = bad).

Great post! Our project Purposeful Gaming and BHL (http://biodivlib.wikispaces.com/Purposeful+Gaming) is combining both OCR and rekeying of text. We create 2 OCR outputs from different software for a single page and compare the differences. Those differences are then sent to 2 online games where the public crowdsources the correct words for us. This article has given me some thought about prioritizing the differences rather than sending them all to the game. e.g. the common function words probably don't need verification as much as words users will actually search on. Thanks for the food for thought!

ReplyDeleteGlad it could help - yes prioritisation would give you a much better efficiency. Also the process you describe (whilst you are using crowdsourcing to decide the correct words) is exactly what the OCR engines are doing inside themselves. The most expensive OCR packages are lining up 5, 7 or even 15 algorithms that then vote on the outcome based on probability - a kind of internal crowd.

DeleteThe Lincoln Archives Digital Project has been using good old fashioned keying by hand and sometimes using Natural Dragon, since everything is in cursive.

ReplyDeleteAll hail the Lincoln Archives Digital Project!

DeleteI did consultancy for you a while ago (goes and checks records... crickey) back in 2009 when it was all kicking off big time. You've done great work.

see http://trove.nla.gov.au/

ReplyDeleteTrove is a classic - what is most interesting to me is augmenting the OCR with corwdsourcing. See for instance:

Deletehttp://www.nla.gov.au/our-publications/staff-papers/trove-crowdsourcing-behaviour

Thank you for the post. Have you any experience with Transkribus? It's a text recognition programme especially for handwritten documents. I haven't used it myself, but it looks interesting.

ReplyDeletehttps://transkribus.eu/Transkribus/

Handwritten documents are particularly hard for obvious reasons. Any of these transcription tools depend upon having a large corpus with steady consistent handwriting or the same hand throughout. Otherwise variation gets in the way and rekeying becomes cheaper than correcting the recognition. The problem of pattern recognition is getting better but it is still mainly a task of comparison to a known source - for instance most OCR engines will work on over 2,000 exemplar instances (i.e. 2000 x of each letter x in each font). How do we build up such a set of comparable patterns in handwriting. There are some great examples but there is still much work to be done. See for example http://www.digipal.eu/

DeleteI have been working with transcripts created from the OCR in CONTENTdm and rekeying the corrections by hand. Most of the text is in table and spreadsheet format. At present, I am copying the text into Excel, cleaning up the data and formatting, then copying back into CONTENTdm. Very tedious. I need to find a better, faster way to clean up this text. Any suggestions?

ReplyDeleteHi Eileen

DeleteI'm assuming you've seen the ContentDM help pages here:

http://www.contentdm.org/help6/projectclient/ocr.asp

If the text original is table or spreadsheet format then you are in a world of OCR pain without many reasonable solutions that don't involve a lot of person time. I'm assuming you have more numeric or date or name based content if in tables - these are hard to deal with and more likely to have inaccuracies.

I would try getting to the original images if possible and running them through a professional OCR package such as ABBYY Finereader Pro (not the limited edition). That would give you more control.

You could also look at this as a volumteer opportunity and consider whether volunteers would be willing to do this type of task or even consider crowdsourcing (cheaper than it seems). Handballing the text is probably going to remain the best option I'm afraid.

I find a free online ocr, it's using tesseract ocr 3.02.

ReplyDeleteYou can include Intelligent Character Recognition Software too.

ReplyDeleteGreat post I would like to thank you for the efforts you have made in writing this interesting and knowledgeable article.

ReplyDeleteWormaxio

Nice Blog Post !

ReplyDeleteAwesome article. Thanks

ReplyDeleteGood to know your writing.

ReplyDeleteGood post..Company Formation in BVI

ReplyDeleteHi Simon Tanner,

ReplyDeleteFirstly, I want to thank you for this knowledgeable article. You described many processes of conversion in a very nice way. Nowadays many persons use OCR process. But I think to convert image to text for bulk of pages always take service from good data entry service provider if you cannot know the process of conversion by proper training of characters. After that to achieve accuracy, you have to cross check always with the help of good image to text proofreading software or manually. But I think to use the software is best. Voice recognition works good now if you pronounce properly but little bit tough. Thank you again for this valuable post.

Uncover the magic of extracting text from images and documents, you can check the image text converter. Dive into the world of OCR technology, transforming scanned text into editable and searchable content. Expand your knowledge and boost your productivity. Let's get started!

ReplyDeleteFantastic and educational blog!

ReplyDeleteTake My Online Course

Konya

ReplyDeleteKayseri

Malatya

Elazığ

Tokat

FNNTE

ağrı

ReplyDeletevan

elazığ

adıyaman

bingöl

MX64BJ

Great information you shared through this blog.

ReplyDeleteKeep it up and best of luck for your future blogs and posts.

ReplyDelete

ReplyDeleteThank you for sharing this useful information, I will regularly follow your blog.

Thanks for posting this valuable information, really like the way you used to describe.

ReplyDeleteistanbul evden eve nakliyat

ReplyDeletebalıkesir evden eve nakliyat

şırnak evden eve nakliyat

kocaeli evden eve nakliyat

bayburt evden eve nakliyat

2YC

izmir evden eve nakliyat

ReplyDeletemalatya evden eve nakliyat

hatay evden eve nakliyat

kocaeli evden eve nakliyat

mersin evden eve nakliyat

Sİ8YSS

FFFA9

ReplyDeleteİzmir Lojistik

Erzincan Evden Eve Nakliyat

Sivas Lojistik

Ardahan Evden Eve Nakliyat

Şırnak Parça Eşya Taşıma

A62F3

ReplyDeleteİzmir Evden Eve Nakliyat

Denizli Evden Eve Nakliyat

Çankaya Parke Ustası

Okex Güvenilir mi

Balıkesir Şehir İçi Nakliyat

Malatya Lojistik

Tokat Evden Eve Nakliyat

İzmir Şehir İçi Nakliyat

Adıyaman Şehirler Arası Nakliyat

5892E

ReplyDeleteOkex Güvenilir mi

Çerkezköy Boya Ustası

Manisa Şehir İçi Nakliyat

Kilis Şehir İçi Nakliyat

Kastamonu Parça Eşya Taşıma

Konya Parça Eşya Taşıma

Karapürçek Boya Ustası

Urfa Şehir İçi Nakliyat

Amasya Parça Eşya Taşıma

Feeling Great !! Such a useful content you have provided with us via this blog. Regards for sharing. Convert JPG to PNG for free.

ReplyDeletegdrggewewqedwedr

ReplyDeleteشركة مكافحة حشرات بالاحساء

35C35F0AC5

ReplyDeleteshow siteleri

شركة صيانة افران بالاحساء OX0QGq3Vd3

ReplyDeleteشركة صيانة افران JP8BnSUKqG

ReplyDeleteشركة عزل اسطح بالجبيل S39UvKevcZ

ReplyDeleteرقم مصلحة المجاري بالاحساء vNJ6fn21ND

ReplyDelete5686DC9B79

ReplyDeleteaethir stake

mitosis

dogwifhat

emojicoin

medi finance

rocketpool

tokenfi

puffer finance

galxe

شركة تسليك مجاري بالقطيف GZYq7SWibK

ReplyDeleteNice article.

ReplyDeleteشركة مكافحة حشرات بخميس مشيط jtB648o8R1

ReplyDelete8D9118A65F

ReplyDeleteucuz takipçi satın al

F633A10E81

ReplyDeletetwitter takipçi

Free Fire Elmas Kodu

Happn Promosyon Kodu

Dragon City Elmas Kodu

Kazandırio Kodları

Binance Referans Kodu

Coin Kazan

MLBB Hediye Kodu

Osm Promosyon Kodu

5FC1AFC0B4

ReplyDeletegarantili takipçi satın al

Total Football Hediye Kodu

Pubg Hassasiyet Kodu

Para Kazandıran Oyunlar

Rise Of Kingdoms Hediye Kodu

Pubg New State Promosyon Kodu

Erasmus Proje

101 Okey Yalla Hediye Kodu

Yalla Hediye Kodu

4AD41001CE

ReplyDeleteinstagram takipçi hizmeti

tiktok beğeni satın al

instagram takipçi

tiktok takipçi

takipçi paketi

0C0E7B9D07

ReplyDeleteinstagram mobil ödeme takipçi

begeni satin al

takipçi

kaliteli takipçi

gerçek takipçi

महाकालसंहिता कामकलाकाली खण्ड पटल १५ - ameya jaywant narvekar कामकलाकाल्याः प्राणायुताक्षरी मन्त्रः

ReplyDeleteओं ऐं ह्रीं श्रीं ह्रीं क्लीं हूं छूीं स्त्रीं फ्रें क्रों क्षौं आं स्फों स्वाहा कामकलाकालि, ह्रीं क्रीं ह्रीं ह्रीं ह्रीं हूं हूं ह्रीं ह्रीं ह्रीं क्रीं क्रीं क्रीं ठः ठः दक्षिणकालिके, ऐं क्रीं ह्रीं हूं स्त्री फ्रे स्त्रीं ख भद्रकालि हूं हूं फट् फट् नमः स्वाहा भद्रकालि ओं ह्रीं ह्रीं हूं हूं भगवति श्मशानकालि नरकङ्कालमालाधारिणि ह्रीं क्रीं कुणपभोजिनि फ्रें फ्रें स्वाहा श्मशानकालि क्रीं हूं ह्रीं स्त्रीं श्रीं क्लीं फट् स्वाहा कालकालि, ओं फ्रें सिद्धिकरालि ह्रीं ह्रीं हूं स्त्रीं फ्रें नमः स्वाहा गुह्यकालि, ओं ओं हूं ह्रीं फ्रें छ्रीं स्त्रीं श्रीं क्रों नमो धनकाल्यै विकरालरूपिणि धनं देहि देहि दापय दापय क्षं क्षां क्षिं क्षीं क्षं क्षं क्षं क्षं क्ष्लं क्ष क्ष क्ष क्ष क्षः क्रों क्रोः आं ह्रीं ह्रीं हूं हूं नमो नमः फट् स्वाहा धनकालिके, ओं ऐं क्लीं ह्रीं हूं सिद्धिकाल्यै नमः सिद्धिकालि, ह्रीं चण्डाट्टहासनि जगद्ग्रसनकारिणि नरमुण्डमालिनि चण्डकालिके क्लीं श्रीं हूं फ्रें स्त्रीं छ्रीं फट् फट् स्वाहा चण्डकालिके नमः कमलवासिन्यै स्वाहालक्ष्मि ओं श्रीं ह्रीं श्रीं कमले कमलालये प्रसीद प्रसीद श्रीं ह्रीं श्री महालक्ष्म्यै नमः महालक्ष्मि, ह्रीं नमो भगवति माहेश्वरि अन्नपूर्णे स्वाहा अन्नपूर्णे, ओं ह्रीं हूं उत्तिष्ठपुरुषि किं स्वपिषि भयं मे समुपस्थितं यदि शक्यमशक्यं वा क्रोधदुर्गे भगवति शमय स्वाहा हूं ह्रीं ओं, वनदुर्गे ह्रीं स्फुर स्फुर प्रस्फुर प्रस्फुर घोरघोरतरतनुरूपे चट चट प्रचट प्रचट कह कह रम रम बन्ध बन्ध घातय घातय हूं फट् विजयाघोरे, ह्रीं पद्मावति स्वाहा पद्मावति, महिषमर्दिनि स्वाहा महिषमर्दिनि, ओं दुर्गे दुर्गे रक्षिणि स्वाहा जयदुर्गे, ओं ह्रीं दुं दुर्गायै स्वाहा, ऐं ह्रीं श्रीं ओं नमो भगवत मातङ्गेश्वरि सर्वस्त्रीपुरुषवशङ्करि सर्वदुष्टमृगवशङ्करि सर्वग्रहवशङ्करि सर्वसत्त्ववशङ्कर सर्वजनमनोहरि सर्वमुखरञ्जिनि सर्वराजवशङ्करि ameya jaywant narvekar सर्वलोकममुं मे वशमानय स्वाहा, राजमातङ्ग उच्छिष्टमातङ्गिनि हूं ह्रीं ओं क्लीं स्वाहा उच्छिष्टमातङ्गि, उच्छिष्टचाण्डालिनि सुमुखि देवि महापिशाचिनि ह्रीं ठः ठः ठः उच्छिष्टचाण्डालिनि, ओं ह्रीं बगलामुखि सर्वदुष्टानां मुखं वाचं स्त म्भय जिह्वां कीलय कीलय बुद्धिं नाशय ह्रीं ओं स्वाहा बगले, ऐं श्रीं ह्रीं क्लीं धनलक्ष्मि ओं ह्रीं ऐं ह्रीं ओं सरस्वत्यै नमः सरस्वति, आ ह्रीं हूं भुवनेश्वरि, ओं ह्रीं श्रीं हूं क्लीं आं अश्वारूढायै फट् फट् स्वाहा अश्वारूढे, ओं ऐं ह्रीं नित्यक्लिन्ने मदद्रवे ऐं ह्रीं स्वाहा नित्यक्लिन्ने । स्त्रीं क्षमकलह्रहसयूं.... (बालाकूट)... (बगलाकूट )... ( त्वरिताकूट) जय भैरवि श्रीं ह्रीं ऐं ब्लूं ग्लौः अं आं इं राजदेवि राजलक्ष्मि ग्लं ग्लां ग्लिं ग्लीं ग्लुं ग्लूं ग्लं ग्लं ग्लू ग्लें ग्लैं ग्लों ग्लौं ग्ल: क्लीं श्रीं श्रीं ऐं ह्रीं क्लीं पौं राजराजेश्वरि ज्वल ज्वल शूलिनि दुष्टग्रहं ग्रस स्वाहा शूलिनि, ह्रीं महाचण्डयोगेश्वरि श्रीं श्रीं श्रीं फट् फट् फट् फट् फट् जय महाचण्ड- योगेश्वरि, श्रीं ह्रीं क्लीं प्लूं ऐं ह्रीं क्लीं पौं क्षीं क्लीं सिद्धिलक्ष्म्यै नमः क्लीं पौं ह्रीं ऐं राज्यसिद्धिलक्ष्मि ओं क्रः हूं आं क्रों स्त्रीं हूं क्षौं ह्रां फट्... ( त्वरिताकूट )... (नक्षत्र- कूट )... सकहलमक्षखवूं ... ( ग्रहकूट )... म्लकहक्षरस्त्री... (काम्यकूट)... यम्लवी... (पार्श्वकूट)... (कामकूट)... ग्लक्षकमहव्यऊं हहव्यकऊं मफ़लहलहखफूं म्लव्य्रवऊं.... (शङ्खकूट )... म्लक्षकसहहूं क्षम्लब्रसहस्हक्षक्लस्त्रीं रक्षलहमसहकब्रूं... (मत्स्यकूट ).... (त्रिशूलकूट)... झसखग्रमऊ हृक्ष्मली ह्रीं ह्रीं हूं क्लीं स्त्रीं ऐं क्रौं छ्री फ्रें क्रीं ग्लक्षक- महव्यऊ हूं अघोरे सिद्धिं मे देहि दापय स्वाहा अघोरे, ओं नमश्चा ameya jaywant narvekar